Enterprises that embark on a data migration expect it to bring tangible benefits to the business. Data unification under a centralized database and optimization are among the key sources of business value that stakeholders hope to gain from implementing and migrating to new systems.

Yet despite the benefits, leaders approach migrations with trepidation – and rightfully so. According to a recent Gartner® report, “through 2023, more than 50% of life insurance policy administration system data migrations will exceed their budget and timeline”.*

Why do over half of these projects overrun? We’ve found that the common denominator is almost always the data: poor-quality legacy data, outdated technology, and countless formats, schemas, and systems.

Focus Early on Data Quality

A crucial mistake that data migration teams can make is to underestimate the complexity and time required for a life insurance data migration effort. With no visibility into the data, it can be hard to predict – and control – project timelines. According to the Gartner report, “data migration can take anywhere from eight to 30 months to complete, or even longer.” That’s quite the wide range.

Data quality issues are a key contributor to migration project overruns, in both cost and timeline. When it comes to large-scale projects like data migrations, organizations too often focus purely on moving data from one system to another, rather than revisiting their data management strategy as a whole. Without that broader view, how do you know you’re moving data in a way that matters (and provides value) to the business?

Learn how to apply data quality techniques to drive business outcomes. [Free Resource]

Data Migration Best Practices

Migrations are not simply one-to-one movements of data. These large-scale, complex initiatives require a strategy that leaves room for data remediation and other obstacles.

Simple data management best practices like the following help establish a baseline and assess inconsistencies ahead of time:

- Cleanse and remediate data accuracy errors before migration begins

- Iterative data cleansing

- Phased migration approach

- A Unified Data Management Platform

- Data Governance

But it can be difficult to navigate the many tools and theories on the market today and know which is right for you. Every data migration is a bit different, and leveraging the experience of an organization like Syniti is invaluable when it comes to overcoming migration risks and generating value.

“After over 25 years of migrating data for all kinds of businesses, we’ve learned many people, no matter the type of database migration, often make the mistake of approaching it purely from a technical perspective,” says Leonard Maganza, CCO at Syniti.

1. Cleanse and remediate data accuracy errors before migration begins. Only move what you need

For a data migration project with fewer errors and a faster time to completion, start with high-quality, validated data and move only what you need. By reducing the migration’s scope, project teams can minimize the scale and complexity of the effort. This reduces risk, time, and cost throughout the life of the project.

Analyze data quality using data profiling techniques to identify quality issues early in the migration effort. Don’t make assumptions about the quality of legacy data. The goal should be data quality of 99% or higher by the time of go-live.
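As a rough illustration of what early data profiling can look like, the sketch below computes completeness and duplicate counts per column for a handful of legacy records. The record layout, field names, and the `profile_column` helper are hypothetical and are not taken from any specific product; a real profiling pass would cover far more rules.

```python
from collections import Counter


def is_blank(value):
    """Treat None and empty/whitespace-only strings as missing."""
    return value is None or str(value).strip() == ""


def profile_column(rows, column):
    """Summarize completeness and duplication for one column."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if not is_blank(v)]
    counts = Counter(present)
    total = len(values)
    missing = total - len(present)
    return {
        "column": column,
        "total": total,
        "missing": missing,
        "completeness_pct": round(100 * len(present) / total, 1) if total else 0.0,
        "distinct": len(counts),
        "duplicated_values": sorted(v for v, n in counts.items() if n > 1),
    }


# Hypothetical legacy policy records, for illustration only.
legacy_policies = [
    {"policy_id": "P-001", "issue_date": "2011-03-04", "insured_dob": "1970-01-15"},
    {"policy_id": "P-002", "issue_date": "", "insured_dob": "1982-07-30"},
    {"policy_id": "P-002", "issue_date": "2015-09-12", "insured_dob": None},
    {"policy_id": "P-004", "issue_date": "2019-11-01", "insured_dob": "1965-05-05"},
]

report = [
    profile_column(legacy_policies, column)
    for column in ("policy_id", "issue_date", "insured_dob")
]

for entry in report:
    print(entry)
```

Running a profile like this before mapping begins surfaces duplicate keys and missing values while there is still time to remediate them, instead of discovering them during conversion tests.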


2. Iterative Data Cleansing

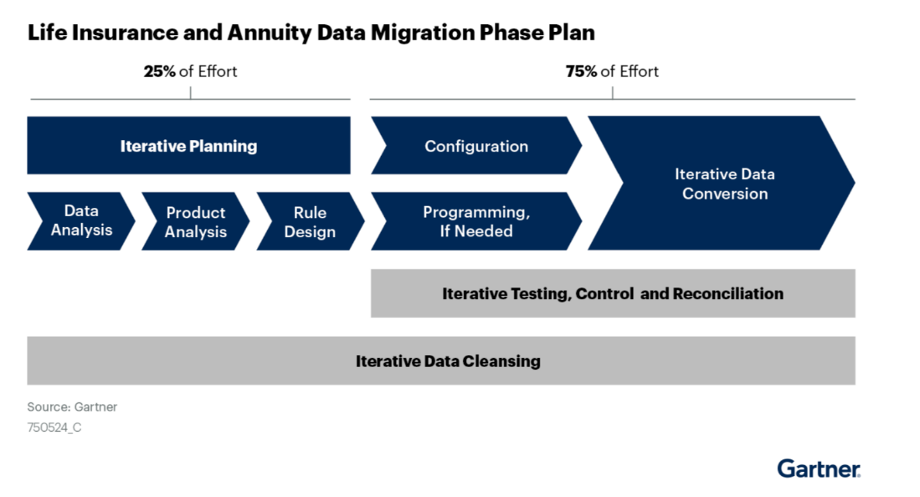

According to Gartner, data migration plans for life insurance organizations should comprise 25% planning and 75% testing, control, and reconciliation, with data cleansing running iteratively across the entire effort (see the graphic above). As the Gartner report puts it, “Data cleansing activities occur throughout the life cycle, but intensify as initial data conversion tests are run.”*
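To make the reconciliation step concrete, here is a minimal sketch of a check that could run after each conversion test: it compares source and target records by key and by a hash of selected fields. The `reconcile` and `row_fingerprint` helpers, the field names, and the sample records are assumptions made for illustration, not part of the Gartner report or any particular platform.

```python
import hashlib


def row_fingerprint(row, fields):
    """Hash the selected fields so rows can be compared cheaply."""
    joined = "|".join(str(row.get(field, "")) for field in fields)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, fields):
    """Report keys that are missing, unexpected, or changed in the target."""
    source = {row[key]: row_fingerprint(row, fields) for row in source_rows}
    target = {row[key]: row_fingerprint(row, fields) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        # Counts are of distinct keys, not raw rows.
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical records from a test conversion run.
source_rows = [
    {"policy_id": "P-001", "face_amount": 250000},
    {"policy_id": "P-002", "face_amount": 100000},
    {"policy_id": "P-003", "face_amount": 500000},
]
target_rows = [
    {"policy_id": "P-001", "face_amount": 250000},
    {"policy_id": "P-002", "face_amount": 10000},
]

result = reconcile(source_rows, target_rows, key="policy_id", fields=["face_amount"])
print(result)
```

Each item the check flags becomes input to the next cleansing iteration, which is one way cleansing activity can intensify as conversion tests begin.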

When data management best practices are already built in, data cleansing is easier to maintain through prebuilt content, dashboards, and automated data checks. Platforms that ship with out-of-the-box data quality rules, reporting, and dashboards can help users discover millions of dollars in savings.

When data quality capabilities are coupled with features like data cataloging and governance, these data cleansing initiatives can continue well past go-live. With knowledge reuse, future data projects can be accelerated by 50%.

3. Phased Migration Approach

The one-and-done, “Big Bang” approach may seem like the faster path forward during a migration, but given the complexity of many modernization efforts or system consolidations, dividing the effort into a series of phases could help reduce complexity and improve quality.

Agile data migrations deliver faster, lower-risk migrations, with a 46% reduction in migration time and a 38% reduction in migration costs. For a successful BORING GO LIVE® data center migration, you need to invest in a repeatable, multi-phased migration approach to move not just the data but the business forward.

Learn more about the 8 steps to a frictionless data migration [Free Resource].

4. A Unified Data Management Platform

A suite of proven data migration tools, such as data discovery, mapping, and translation tools, can minimize or eliminate custom programming and automate migration efforts to the fullest extent possible. What’s more, a centralized platform provides a single point of truth for stakeholders, business users, and developers alike. All data migration and data quality efforts run under the same rules, workflows, and dashboards. This improves project transparency, bridges the gap between IT and business users, and makes it easier to perform iterative data cleansing throughout the life of the project.

“We now have a predictable, reliable toolset, team and process that can be reused to maintain data quality in production, and can be leveraged by other data quality, cleansing and governance initiatives.”

Andrea Smith, Program Manager, SAP Convergence IT, Zurich Insurance

5. Data Governance

Data governance issues in a migration effort present both the greatest challenges and the greatest opportunities for life insurance CIOs. Highly regulated industries such as life insurance, finance, and healthcare all rely on data governance to maintain regulatory compliance.

For complete project transparency, consolidate critical rules, mappings, design decisions, audit logs, and assigned tasks in a single system for one version of the truth. A central location for knowledge sharing encourages enterprise-wide collaboration among all stakeholders and eliminates data inconsistencies that often complicate integrations. Complete tracking and visibility make it easy to correct course quickly or change scope. With this level of project transparency, organizations can consistently experience a positive ROI in under 12 months.

As in many industries, data migrations within life insurance and financial services can be particularly painful due to regulatory compliance challenges, legacy systems, and a lack of visibility into data issues. Organizations should leverage a repeatable migration framework, along with the right technology to support it.

With data tools to streamline every step of the data migration process, including Data Catalog and Governance, Replication, and Data Quality, Syniti Knowledge Platform delivers faster, frictionless enterprise data migration from one trusted cloud data platform.

*Gartner, “3 Ways to Lower Risk in Life Insurance Data Migration” July 12, 2022.

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.